Export Teleport Audit Events to the Elastic Stack

Teleport's Event Handler plugin receives audit events from the Teleport Auth Service and forwards them to your log management solution, letting you perform historical analysis, detect unusual behavior, and form a better understanding of how users interact with your Teleport cluster.

In this guide, we will show you how to configure Teleport's Event Handler plugin to send your Teleport audit events to the Elastic Stack. In this setup, the Event Handler plugin forwards audit events from Teleport to Logstash, which stores them in Elasticsearch for visualization and alerting in Kibana.

Prerequisites

- A running Teleport cluster version 15.4.22 or above. If you want to get started with Teleport, sign up for a free trial or set up a demo environment.

- The tctl admin tool and tsh client tool.

  On Teleport Enterprise, you must use the Enterprise version of tctl, which you can download from your Teleport account workspace. Otherwise, visit Installation for instructions on downloading tctl and tsh for Teleport Community Edition.

- Recommended: Configure Machine ID to provide short-lived Teleport credentials to the plugin. Before following this guide, follow a Machine ID deployment guide to run the tbot binary on your infrastructure.

- Logstash version 8.4.1 or above running on a Linux host. In this guide, you will also run the Event Handler plugin on this host.

- Elasticsearch and Kibana version 8.4.1 or above, either running via an Elastic Cloud account or on your own infrastructure. You will need permissions to create and manage users in Elasticsearch.

We have tested this guide on the Elastic Stack version 8.4.1.

Step 1/4. Set up the Event Handler plugin

The Event Handler plugin is a binary that runs independently of your Teleport cluster. It authenticates to your Teleport cluster and Logstash using mutual TLS. In this section, you will install the Event Handler plugin on the Linux host where you are running Logstash and generate credentials that the plugin will use for authentication.

Install the Event Handler plugin

Follow the instructions for your environment to install the Event Handler plugin on your Logstash host.

Linux:

$ curl -L -O https://get.gravitational.com/teleport-event-handler-v15.4.22-linux-amd64-bin.tar.gz
$ tar -zxvf teleport-event-handler-v15.4.22-linux-amd64-bin.tar.gz
$ sudo ./teleport-event-handler/install

We currently only build the Event Handler plugin for amd64 machines. For ARM architecture, you can build from source.

macOS:

$ curl -L -O https://get.gravitational.com/teleport-event-handler-v15.4.22-darwin-amd64-bin.tar.gz
$ tar -zxvf teleport-event-handler-v15.4.22-darwin-amd64-bin.tar.gz
$ sudo ./teleport-event-handler/install

We currently only build the event handler plugin for amd64 machines. If your macOS machine uses Apple silicon, you will need to install Rosetta before you can run the event handler plugin. You can also build from source.

Docker:

Ensure that you have Docker installed and running.

$ docker pull public.ecr.aws/gravitational/teleport-plugin-event-handler:15.4.22

Helm:

To allow Helm to install charts that are hosted in the Teleport Helm repository, use helm repo add:

$ helm repo add teleport https://charts.releases.teleport.dev

To update the cache of charts from the remote repository, run helm repo update:

$ helm repo update

Build via Go:

You will need Go >= 1.21 installed.

Run the following commands on your Logstash host:

$ git clone https://github.com/gravitational/teleport.git --depth 1 -b v15.4.22
$ cd teleport/integrations/event-handler
$ make build

The resulting executable will have the name event-handler. To follow the rest of this guide, rename this file to teleport-event-handler and move it to /usr/local/bin.

Generate a starter config file

Generate a configuration file with placeholder values for the Teleport Event Handler plugin. Later in this guide, we will edit the configuration file for your environment.

Cloud-Hosted:

Run the configure command to generate a sample configuration. Replace mytenant.teleport.sh with the DNS name of your Teleport Enterprise Cloud tenant:

$ teleport-event-handler configure . mytenant.teleport.sh:443

Self-Hosted:

Run the configure command to generate a sample configuration. Replace teleport.example.com:443 with the DNS name and HTTPS port of Teleport's Proxy Service:

$ teleport-event-handler configure . teleport.example.com:443

Helm Chart:

Run the configure command to generate a sample configuration. Assign TELEPORT_CLUSTER_ADDRESS to the DNS name and port of your Teleport Auth Service or Proxy Service:

$ TELEPORT_CLUSTER_ADDRESS=mytenant.teleport.sh:443
$ docker run -v `pwd`:/opt/teleport-plugin -w /opt/teleport-plugin public.ecr.aws/gravitational/teleport-plugin-event-handler:15.4.22 configure . ${TELEPORT_CLUSTER_ADDRESS?}

In order to export audit events, you'll need to have the root certificate and the client credentials available as a secret. Use the following command to create that secret in Kubernetes:

$ kubectl create secret generic teleport-event-handler-client-tls --from-file=ca.crt=ca.crt,client.crt=client.crt,client.key=client.key

This will pack the content of ca.crt, client.crt, and client.key into the secret so the Helm chart can mount them to their appropriate path.

You'll see the following output:

Teleport event handler 15.4.22

[1] mTLS Fluentd certificates generated and saved to ca.crt, ca.key, server.crt, server.key, client.crt, client.key
[2] Generated sample teleport-event-handler role and user file teleport-event-handler-role.yaml
[3] Generated sample fluentd configuration file fluent.conf
[4] Generated plugin configuration file teleport-event-handler.toml

The plugin generates several setup files:

$ ls -l
# -rw------- 1 bob bob 1038 Jul 1 11:14 ca.crt
# -rw------- 1 bob bob 1679 Jul 1 11:14 ca.key
# -rw------- 1 bob bob 1042 Jul 1 11:14 client.crt
# -rw------- 1 bob bob 1679 Jul 1 11:14 client.key
# -rw------- 1 bob bob 541 Jul 1 11:14 fluent.conf
# -rw------- 1 bob bob 1078 Jul 1 11:14 server.crt
# -rw------- 1 bob bob 1766 Jul 1 11:14 server.key
# -rw------- 1 bob bob 260 Jul 1 11:14 teleport-event-handler-role.yaml
# -rw------- 1 bob bob 343 Jul 1 11:14 teleport-event-handler.toml

| File(s) | Purpose |
|---|---|
| ca.crt and ca.key | Self-signed CA certificate and private key for Fluentd |
| server.crt and server.key | Fluentd server certificate and key |
| client.crt and client.key | Fluentd client certificate and key, all signed by the generated CA |
| teleport-event-handler-role.yaml | User and role resource definitions for Teleport's event handler |
| fluent.conf | Fluentd plugin configuration |
| teleport-event-handler.toml | Event Handler plugin configuration |

Running the Event Handler separately from the log forwarder

This guide assumes that you are running the Event Handler on the same host or Kubernetes pod as your log forwarder. If you are not, you will need to instruct the Event Handler to generate mTLS certificates for subjects besides localhost. To do this, use the --cn and --dns-names flags of the teleport-event-handler configure command.

For example, if your log forwarder is addressable at forwarder.example.com and the Event Handler at handler.example.com, you would run the following configure command:

$ teleport-event-handler configure --cn=handler.example.com --dns-names=forwarder.example.com

The command generates client and server certificates with the subjects set to the value of --cn.

The --dns-names flag accepts a comma-separated list of DNS names. It will append subject alternative names (SANs) to the server certificate (the one you will provide to your log forwarder) for each DNS name in the list. The Event Handler looks up each DNS name before appending it as an SAN and exits with an error if the lookup fails.
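Because configure exits when one of the --dns-names entries does not resolve, you may want to check the list first from the host where you will run the command. The following Python sketch is a hypothetical pre-flight helper. split_dns_names and check_dns_names are illustrative names and are not part of Teleport. It assumes that the host's resolver, queried through socket.getaddrinfo, sees the same records the Event Handler will.

```python
import socket


def split_dns_names(value: str) -> list[str]:
    """Split a comma-separated --dns-names value into trimmed, non-empty names."""
    return [name.strip() for name in value.split(",") if name.strip()]


def check_dns_names(value: str) -> list[str]:
    """Resolve each name locally; raise ValueError naming any that do not resolve."""
    names = split_dns_names(value)
    unresolved = []
    for name in names:
        try:
            # getaddrinfo goes through the host's resolver (DNS, /etc/hosts, and so on).
            socket.getaddrinfo(name, None)
        except socket.gaierror:
            unresolved.append(name)
    if unresolved:
        raise ValueError("cannot resolve: " + ", ".join(unresolved))
    return names
```

For example, check_dns_names("forwarder.example.com") returns ["forwarder.example.com"] if the name resolves and raises ValueError if it does not.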

We'll re-purpose the files generated for Fluentd in our Logstash configuration.

Define RBAC resources

The teleport-event-handler configure command generated a file called teleport-event-handler-role.yaml. This file defines a teleport-event-handler role and a user with read-only access to the event API:

kind: role
metadata:
  name: teleport-event-handler
spec:
  allow:
    rules:
      - resources: ['event', 'session']
        verbs: ['list','read']
version: v5
---
kind: user
metadata:
  name: teleport-event-handler
spec:
  roles: ['teleport-event-handler']
version: v2

Move this file to your workstation (or recreate it by pasting the snippet above) and use tctl on your workstation to create the role and the user:

$ tctl create -f teleport-event-handler-role.yaml

# user "teleport-event-handler" has been created

# role 'teleport-event-handler' has been created

Using tctl on the Logstash host?

If you are running Teleport on your Elastic Stack host, e.g., you are exposing Kibana's HTTP endpoint via the Teleport Application Service, running the tctl create command above will generate an error similar to the following:

ERROR: tctl must be either used on the auth server or provided with the identity file via --identity flag

To avoid this error, create the teleport-event-handler-role.yaml file on your workstation, then sign in to your Teleport cluster and run the tctl command locally.

Enable issuing of credentials for the Event Handler role

Machine ID:

With the role created, you now need to allow the Machine ID bot to produce credentials for this role.

This can be done with tctl, replacing my-bot with the name of your bot:

$ tctl bots update my-bot --add-roles teleport-event-handler

Long-lived identity files:

In order for the Event Handler plugin to forward events from your Teleport cluster, it needs signed credentials from the cluster's certificate authority. The teleport-event-handler user cannot request this itself, and requires another user to impersonate this account in order to request credentials.

Create a role that enables your user to impersonate the teleport-event-handler user. First, paste the following YAML document into a file called teleport-event-handler-impersonator.yaml:

kind: role
version: v5
metadata:
  name: teleport-event-handler-impersonator
spec:
  options:
    # max_session_ttl defines the TTL (time to live) of SSH certificates
    # issued to the users with this role.
    max_session_ttl: 10h

  # This section declares a list of resource/verb combinations that are
  # allowed for the users of this role. By default nothing is allowed.
  allow:
    impersonate:
      users: ["teleport-event-handler"]
      roles: ["teleport-event-handler"]

Next, create the role:

$ tctl create teleport-event-handler-impersonator.yaml

Assign the teleport-event-handler-impersonator role to the Teleport user that will generate signed credentials for the Event Handler by running the appropriate commands for your authentication provider:

Local User:

- Retrieve your local user's roles as a comma-separated list:

  $ ROLES=$(tsh status -f json | jq -r '.active.roles | join(",")')

- Edit your local user to add the new role:

  $ tctl users update $(tsh status -f json | jq -r '.active.username') \
    --set-roles "${ROLES?},teleport-event-handler-impersonator"

- Sign out of the Teleport cluster and sign in again to assume the new role.

GitHub:

- Retrieve your github authentication connector:

  $ tctl get github/github --with-secrets > github.yaml

  Note that the --with-secrets flag adds the value of spec.client_secret to the github.yaml file. Because this value is sensitive, you should remove the github.yaml file immediately after updating the resource.

- Edit github.yaml, adding teleport-event-handler-impersonator to the teams_to_roles section.

  The team you should map to this role depends on how you have designed your organization's role-based access controls (RBAC). However, the team must include your user account and should be the smallest team possible within your organization.

  Here is an example:

    teams_to_roles:
      - organization: octocats
        team: admins
        roles:
          - access
          - teleport-event-handler-impersonator

- Apply your changes:

  $ tctl create -f github.yaml

- Sign out of the Teleport cluster and sign in again to assume the new role.

SAML:

- Retrieve your saml configuration resource:

  $ tctl get --with-secrets saml/mysaml > saml.yaml

  Note that the --with-secrets flag adds the value of spec.signing_key_pair.private_key to the saml.yaml file. Because this key contains a sensitive value, you should remove the saml.yaml file immediately after updating the resource.

- Edit saml.yaml, adding teleport-event-handler-impersonator to the attributes_to_roles section.

  The attribute you should map to this role depends on how you have designed your organization's role-based access controls (RBAC). However, the group must include your user account and should be the smallest group possible within your organization.

  Here is an example:

    attributes_to_roles:
      - name: "groups"
        value: "my-group"
        roles:
          - access
          - teleport-event-handler-impersonator

- Apply your changes:

  $ tctl create -f saml.yaml

- Sign out of the Teleport cluster and sign in again to assume the new role.

OIDC:

- Retrieve your oidc configuration resource:

  $ tctl get oidc/myoidc --with-secrets > oidc.yaml

  Note that the --with-secrets flag adds the value of spec.client_secret to the oidc.yaml file. Because this value is sensitive, you should remove the oidc.yaml file immediately after updating the resource.

- Edit oidc.yaml, adding teleport-event-handler-impersonator to the claims_to_roles section.

  The claim you should map to this role depends on how you have designed your organization's role-based access controls (RBAC). However, the group must include your user account and should be the smallest group possible within your organization.

  Here is an example:

    claims_to_roles:
      - name: "groups"
        value: "my-group"
        roles:
          - access
          - teleport-event-handler-impersonator

- Apply your changes:

  $ tctl create -f oidc.yaml

- Sign out of the Teleport cluster and sign in again to assume the new role.

Export the access plugin identity

Give the plugin access to a Teleport identity file. We recommend using Machine ID for this in order to produce short-lived identity files that are less dangerous if exfiltrated, though in demo deployments, you can generate longer-lived identity files with tctl.

Machine ID:

Configure tbot with an output that will produce the credentials needed by the plugin. As the plugin will be accessing the Teleport API, the correct output type to use is identity.

For this guide, the directory destination will be used. This will write these credentials to a specified directory on disk. Ensure that this directory can be written to by the Linux user that tbot runs as, and that it can be read by the Linux user that the plugin will run as.
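To confirm these directory permissions, you could run a small check once as the tbot user and once as the plugin user. This Python sketch is illustrative only. check_output_dir is a hypothetical helper, and because os.access tests the real user ID, you would run it with something like sudo -u <user> python3.

```python
import os


def check_output_dir(path: str) -> dict[str, bool]:
    """Report whether the *current* user can read and write files in a directory.

    os.access checks the real user ID, so run this as the user in question.
    """
    is_dir = os.path.isdir(path)
    return {
        "exists": is_dir,
        # Listing and opening files requires read plus execute (search) permission.
        "readable": is_dir and os.access(path, os.R_OK | os.X_OK),
        # Creating or replacing files requires write plus execute permission.
        "writable": is_dir and os.access(path, os.W_OK | os.X_OK),
    }
```

For the tbot user, exists and writable should both be True for /opt/machine-id. For the plugin user, exists and readable should both be True.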

Modify your tbot configuration to add an identity output.

If running tbot on a Linux server, use the directory output to write identity files to the /opt/machine-id directory:

outputs:
  - type: identity
    destination:
      type: directory
      # For this guide, /opt/machine-id is used as the destination directory.
      # You may wish to customize this. Multiple outputs cannot share the same
      # destination.
      path: /opt/machine-id

If running tbot on Kubernetes, write the identity file to a Kubernetes secret instead:

outputs:
  - type: identity
    destination:
      type: kubernetes_secret
      name: teleport-event-handler-identity

If operating tbot as a background service, restart it. If running tbot in one-shot mode, execute it now.

You should now see an identity file under /opt/machine-id or a Kubernetes secret named teleport-event-handler-identity. This contains the private key and signed certificates needed by the plugin to authenticate with the Teleport Auth Service.

Long-lived identity files:

Like all Teleport users, teleport-event-handler needs signed credentials in order to connect to your Teleport cluster. You will use the tctl auth sign command to request these credentials.

The following tctl auth sign command impersonates the teleport-event-handler user, generates signed credentials, and writes an identity file to the local directory:

$ tctl auth sign --user=teleport-event-handler --out=identity

The plugin connects to the Teleport Auth Service's gRPC endpoint over TLS.

The identity file, identity, includes both TLS and SSH credentials. The plugin uses the SSH credentials to connect to the Proxy Service, which establishes a reverse tunnel connection to the Auth Service. The plugin uses this reverse tunnel, along with your TLS credentials, to connect to the Auth Service's gRPC endpoint.

Certificate Lifetime

By default, tctl auth sign produces certificates with a relatively short lifetime. For production deployments, we suggest using Machine ID to programmatically issue and renew certificates for your plugin. See our Machine ID getting started guide to learn more.

Note that you cannot issue certificates that are valid longer than your existing credentials. For example, to issue certificates with a 1000-hour TTL, you must be logged in with a session that is valid for at least 1000 hours. This means your user must have a role allowing a max_session_ttl of at least 1000 hours (60000 minutes), and you must specify a --ttl when logging in:

$ tsh login --proxy=teleport.example.com --ttl=60060
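The numbers above follow from the fact that tsh login --ttl is given in minutes: 1000 hours is 60000 minutes, and 60060 minutes adds one hour of headroom. If, as the note implies, Teleport will not grant a session longer than the role's max_session_ttl, that headroom also has to fit within the role's limit. The Python helpers below (hypothetical names) only encode this arithmetic.

```python
def tsh_login_ttl(cert_ttl_hours: int, headroom_minutes: int = 60) -> int:
    """Minutes to pass to `tsh login --ttl` so the session outlives the certificates.

    tsh expresses --ttl in minutes; the headroom covers the time between logging
    in and running `tctl auth sign`.
    """
    return cert_ttl_hours * 60 + headroom_minutes


def fits_max_session_ttl(login_ttl_minutes: int, max_session_ttl_hours: int) -> bool:
    """True if the requested login TTL does not exceed the role's max_session_ttl."""
    return login_ttl_minutes <= max_session_ttl_hours * 60
```

With the defaults, tsh_login_ttl(1000) gives the 60060 used above, and fits_max_session_ttl shows that a role with max_session_ttl: 1001h covers it while 1000h does not.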

If you are running the plugin on a Linux server, create a data directory to hold certificate files for the plugin and move the identity file into it:

$ sudo mkdir -p /var/lib/teleport/plugins/api-credentials
$ sudo mv identity /var/lib/teleport/plugins/api-credentials

If you are running the plugin on Kubernetes, create a Kubernetes secret that contains the Teleport identity file:

$ kubectl -n teleport create secret generic --from-file=identity teleport-event-handler-identity

Once the Teleport credentials expire, you will need to renew them by running the tctl auth sign command again.

Step 2/4. Configure a Logstash pipeline

The Event Handler plugin forwards audit logs from Teleport by sending HTTP requests to a user-configured endpoint. We will define a Logstash pipeline that handles these requests, extracts logs, and sends them to Elasticsearch.

Create a role for the Event Handler plugin

Your Logstash pipeline will require permissions to create and manage Elasticsearch indexes and index lifecycle management policies, plus get information about your Elasticsearch deployment. Create a role with these permissions so you can later assign it to the Elasticsearch user you will create for the Event Handler.

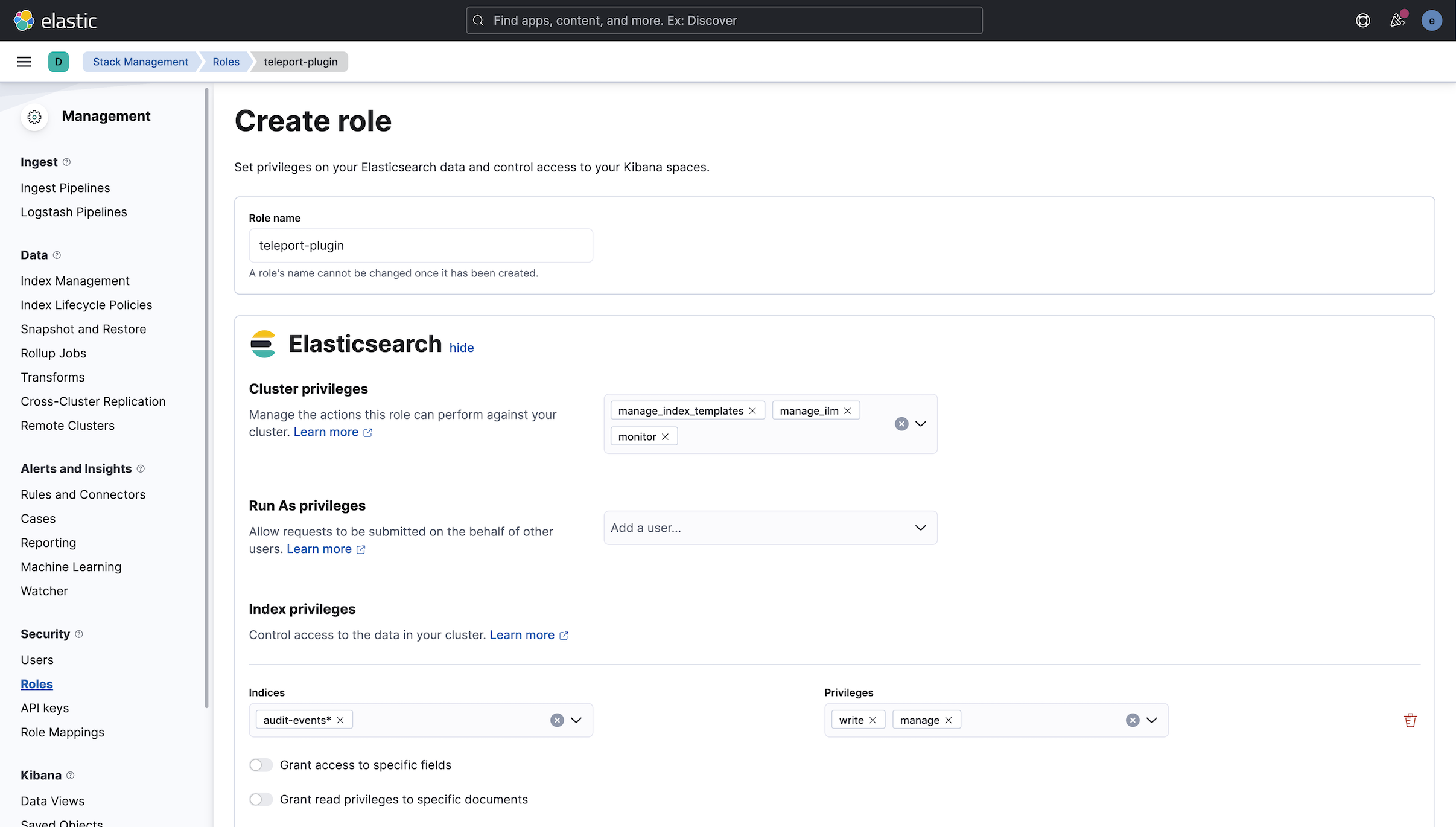

In Kibana, navigate to "Management" > "Roles" and click "Create role". Enter the name teleport-plugin for the new role. Under the "Elasticsearch" section, under "Cluster privileges", enter manage_index_templates, manage_ilm, and monitor.

Under "Index privileges", define an entry with audit-events-* in the "Indices" field and write and manage in the "Privileges" field. Click "Create role".

Create an Elasticsearch user for the Event Handler

Create an Elasticsearch user that Logstash can authenticate as when making requests to the Elasticsearch API.

In Kibana, find the hamburger menu on the upper left and click "Management", then "Users" > "Create user". Enter teleport for the "Username" and provide a secure password.

Assign the user the teleport-plugin role we defined earlier.
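If you prefer to script these two Kibana steps, Elasticsearch's security API accepts the same role and user definitions through PUT /_security/role/<name> and POST /_security/user/<username>. The Python sketch below only builds the requests with the standard library. The Elasticsearch URL, the administrative credentials passed as admin_auth, and the helper names are placeholders you would adapt. It is an alternative to the Kibana UI, not a required step.

```python
import base64
import json
import urllib.request


def _json_request(url: str, method: str, body: dict, auth: str) -> urllib.request.Request:
    """Build an Elasticsearch API request with a JSON body and HTTP basic auth."""
    token = base64.b64encode(auth.encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode(),
        method=method,
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )


def teleport_plugin_role_request(es_url: str, admin_auth: str) -> urllib.request.Request:
    """PUT /_security/role/teleport-plugin with the privileges listed above."""
    body = {
        "cluster": ["manage_index_templates", "manage_ilm", "monitor"],
        "indices": [{"names": ["audit-events-*"], "privileges": ["write", "manage"]}],
    }
    return _json_request(f"{es_url}/_security/role/teleport-plugin", "PUT", body, admin_auth)


def teleport_user_request(es_url: str, admin_auth: str, password: str) -> urllib.request.Request:
    """POST /_security/user/teleport, assigning the teleport-plugin role."""
    body = {"password": password, "roles": ["teleport-plugin"]}
    return _json_request(f"{es_url}/_security/user/teleport", "POST", body, admin_auth)
```

To send a request, pass it to urllib.request.urlopen. If your cluster presents a certificate signed by a private CA, you would also pass an ssl.SSLContext that trusts that CA through the context argument.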

Prepare TLS credentials for Logstash

Later in this guide, your Logstash pipeline will use an HTTP input to receive audit events from the Teleport Event Handler plugin.

Logstash's HTTP input can only load a private key that uses the unencrypted PKCS #8 format. When you ran teleport-event-handler configure earlier, the command generated an encrypted RSA key. We will convert this key to PKCS #8.

You will need a password to decrypt the RSA key. To retrieve this, execute the following command in the directory where you ran teleport-event-handler configure:

$ cat fluent.conf | grep passphrase

private_key_passphrase "ffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffffff"
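If you want to script the conversion, the passphrase is the quoted value on that line. The Python sketch below (read_passphrase is a hypothetical helper) extracts it from the contents of fluent.conf. It assumes the line keeps the private_key_passphrase "..." form shown above.

```python
import re

# Matches: private_key_passphrase "<value>"
PASSPHRASE_RE = re.compile(r'private_key_passphrase\s+"([^"]*)"')


def read_passphrase(fluent_conf_text: str) -> str:
    """Return the quoted private_key_passphrase value from fluent.conf text."""
    match = PASSPHRASE_RE.search(fluent_conf_text)
    if match is None:
        raise ValueError("private_key_passphrase not found")
    return match.group(1)
```

For example, read_passphrase(open("fluent.conf").read()) returns the 64-character value without the surrounding quotes.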

Convert the encrypted RSA key to an unencrypted PKCS #8 key. The command will prompt you for the password you retrieved:

$ openssl pkcs8 -topk8 -in server.key -nocrypt -out pkcs8.key

Enable Logstash to read the new key, plus the CA and certificate we generated earlier:

$ chmod +r pkcs8.key ca.crt server.crt
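To double-check the result before pointing Logstash at it, you can look at the PEM header. An unencrypted PKCS #8 key begins with -----BEGIN PRIVATE KEY-----, while encrypted keys use a different BEGIN line or carry a Proc-Type: 4,ENCRYPTED header. The Python sketch below classifies a key by these markers. pem_key_kind and its return labels are hypothetical, and it inspects only the headers, not the key material.

```python
def pem_key_kind(pem_text: str) -> str:
    """Classify a PEM private key by its BEGIN line (and legacy encryption header)."""
    kinds = {
        "-----BEGIN PRIVATE KEY-----": "pkcs8",
        "-----BEGIN ENCRYPTED PRIVATE KEY-----": "pkcs8-encrypted",
        "-----BEGIN RSA PRIVATE KEY-----": "pkcs1-rsa",
    }
    for line in pem_text.splitlines():
        kind = kinds.get(line.strip())
        if kind is None:
            continue
        # Legacy ("traditional") encrypted RSA keys keep the PKCS #1 BEGIN line
        # and add a Proc-Type header instead of changing it.
        if kind == "pkcs1-rsa" and "Proc-Type: 4,ENCRYPTED" in pem_text:
            return "pkcs1-rsa-encrypted"
        return kind
    return "unknown"
```

For the converted file, pem_key_kind(open("pkcs8.key").read()) should return "pkcs8".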

Define an index template

When Logstash forwards audit events to Elasticsearch, Elasticsearch needs to know how to map their fields. You can define this using an index template, which Elasticsearch applies when it creates an index whose name matches the template's pattern.

Create a file called audit-events.json with the following content:

{
  "index_patterns": ["audit-events-*"],
  "template": {
    "settings": {},
    "mappings": {
      "dynamic": "true"
    }
  }
}

This index template modifies any index with the pattern audit-events-*. Because it includes the "dynamic": "true" setting, it instructs Elasticsearch to define index fields dynamically based on the events it receives. This is useful for Teleport audit events, which use a variety of fields depending on the event type.

Define a Logstash pipeline

On the host where you are running Logstash, create a configuration file that defines a Logstash pipeline. This pipeline will receive logs on port 9601 and forward them to Elasticsearch.

Create a file called /etc/logstash/conf.d/teleport-audit.conf with the following content:

input {
  http {
    port => 9601
    ssl => true
    ssl_certificate => "/home/server.crt"
    ssl_key => "/home/pkcs8.key"
    ssl_certificate_authorities => [
      "/home/ca.crt"
    ]
    ssl_verify_mode => "force_peer"
  }
}

output {
  elasticsearch {
    user => "teleport"
    password => "ELASTICSEARCH_PASSPHRASE"
    template_name => "audit-events"
    template => "/home/audit-events.json"
    index => "audit-events-%{+yyyy.MM.dd}"
    template_overwrite => true
  }
}

In the input.http section, update ssl_certificate and ssl_certificate_authorities to include the locations of the server certificate and certificate authority files that the teleport-event-handler configure command generated earlier.

Logstash will authenticate client certificates against the CA file and present a signed certificate to the Teleport Event Handler plugin.

Edit the ssl_key field to include the path to the pkcs8.key file we generated earlier.

In the output.elasticsearch section, edit the following fields depending on whether you are using Elastic Cloud or your own Elastic Stack deployment.

Elastic Cloud:

Assign cloud_auth to a string with the content teleport:PASSWORD, replacing PASSWORD with the password you assigned to your teleport user earlier.

Visit https://cloud.elastic.co/deployments, find the "Cloud ID" field, copy the content, and add it as the value of cloud_id in your Logstash pipeline configuration. The elasticsearch section should resemble the following:

elasticsearch {
  cloud_id => "CLOUD_ID"
  cloud_auth => "teleport:PASSWORD"
  template_name => "audit-events"
  template => "/home/audit-events.json"
  index => "audit-events-%{+yyyy.MM.dd}"
  template_overwrite => true
}

Self-Hosted:

Assign hosts to a string indicating the hostname of your Elasticsearch host. Assign user to teleport and password to the password you created for your teleport user earlier.

The elasticsearch section should resemble the following:

elasticsearch {
  hosts => "elasticsearch.example.com"
  user => "teleport"
  password => "PASSWORD"
  template_name => "audit-events"
  template => "/home/audit-events.json"
  index => "audit-events-%{+yyyy.MM.dd}"
  template_overwrite => true
}

Finally, modify template to point to the path to the audit-events.json file you created earlier.

Because the index template we will create with this file applies to indices with the prefix audit-events-*, and we have configured our Logstash pipeline to create an index with the name "audit-events-%{+yyyy.MM.dd}", Elasticsearch will automatically index fields from Teleport audit events.
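To see which index names this produces, the Python sketch below mimics the index setting for a given event timestamp and checks the result against the template's index_patterns. It assumes that Logstash formats %{+yyyy.MM.dd} from each event's @timestamp in UTC. It uses fnmatch as an approximation of Elasticsearch's wildcard matching. daily_index and template_applies are illustrative names.

```python
import fnmatch
from datetime import datetime, timezone

INDEX_PATTERNS = ["audit-events-*"]  # copied from audit-events.json


def daily_index(ts: datetime) -> str:
    """Approximate audit-events-%{+yyyy.MM.dd} for a timezone-aware @timestamp."""
    return "audit-events-" + ts.astimezone(timezone.utc).strftime("%Y.%m.%d")


def template_applies(index_name: str, patterns=INDEX_PATTERNS) -> bool:
    """True if an index name matches one of the template's index_patterns."""
    return any(fnmatch.fnmatchcase(index_name, p) for p in patterns)
```

For an event at 2022-09-15 18:27 UTC, daily_index returns audit-events-2022.09.15, which template_applies accepts.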

Disable the Elastic Common Schema for your pipeline

The Elastic Common Schema (ECS) is a standard set of fields that Elastic Stack uses to parse and visualize data. Since we are configuring Elasticsearch to index all fields from your Teleport audit logs dynamically, we will disable the ECS for your Logstash pipeline.

On the host where you are running Logstash, edit /etc/logstash/pipelines.yml to add the following entry:

- pipeline.id: teleport-audit-logs
  path.config: "/etc/logstash/conf.d/teleport-audit.conf"
  pipeline.ecs_compatibility: disabled

This disables the ECS for your Teleport audit log pipeline.

If your pipelines.yml file defines an existing pipeline that includes teleport-audit.conf, e.g., by using a wildcard value in path.config, adjust the existing pipeline definition so it no longer applies to teleport-audit.conf.
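One way to spot that overlap is to compare teleport-audit.conf against each pipeline's path.config. The Python sketch below takes the pipeline entries as a list of dictionaries. You might load them from pipelines.yml with the third-party PyYAML package (yaml.safe_load), which is an assumption and not part of this guide. overlapping_pipelines is a hypothetical helper, and fnmatch only approximates Logstash's glob handling.

```python
import fnmatch

TELEPORT_CONF = "/etc/logstash/conf.d/teleport-audit.conf"


def overlapping_pipelines(pipelines: list[dict], target: str = TELEPORT_CONF) -> list[str]:
    """Return ids of other pipelines whose path.config would also load target."""
    hits = []
    for pipeline in pipelines:
        pipeline_id = pipeline.get("pipeline.id", "<unnamed>")
        if pipeline_id == "teleport-audit-logs":
            continue  # the dedicated pipeline is supposed to load the file
        pattern = pipeline.get("path.config", "")
        # Approximation: fnmatch's "*" also matches "/", and {a,b} is not expanded.
        if pattern and fnmatch.fnmatchcase(target, pattern):
            hits.append(pipeline_id)
    return hits
```

For example, a main pipeline with path.config set to /etc/logstash/conf.d/*.conf would be reported, and you would narrow its pattern or move teleport-audit.conf elsewhere.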

Run the Logstash pipeline

Restart Logstash:

$ sudo systemctl restart logstash

Make sure your Logstash pipeline started successfully by running the following command to tail Logstash's logs:

$ sudo journalctl -u logstash -f

When your Logstash pipeline initializes its http input and starts running, you should see a log similar to this:

Sep 15 18:27:13 myhost logstash[289107]: [2022-09-15T18:27:13,491][INFO ][logstash.inputs.http][main][33bdff0416b6a2b643e6f4ab3381a90c62b3aa05017770f4eb9416d797681024] Starting http input listener {:address=>"0.0.0.0:9601", :ssl=>"true"}

These logs indicate that your Logstash pipeline has connected to Elasticsearch and installed a new index template:

Sep 12 19:49:06 myhost logstash[33762]: [2022-09-12T19:49:06,309][INFO ][logstash.outputs.elasticsearch][main] Elasticsearch version determined (8.4.1) {:es_version=>8}

Sep 12 19:50:00 myhost logstash[33762]: [2022-09-12T19:50:00,993][INFO ][logstash.outputs.elasticsearch][main] Installing Elasticsearch template {:name=>"audit-events"}
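Before starting the Event Handler, you can check that the http input accepts mutual TLS by sending one request with the client certificate the Event Handler will use. The Python sketch below makes three assumptions. It runs in the directory that contains ca.crt, client.crt, and client.key. client.key is not passphrase-protected. The server certificate covers localhost, the configure default described earlier. The payload is a made-up test document, not a real Teleport audit event, and it will be indexed like any other event, so you may want to delete it afterward.

```python
import json
import ssl
import urllib.request

# Files generated by `teleport-event-handler configure` (adjust paths as needed).
CA_FILE = "ca.crt"
CERT_FILE = "client.crt"
KEY_FILE = "client.key"


def build_test_request(url: str = "https://localhost:9601") -> urllib.request.Request:
    """Build a JSON POST carrying a hypothetical test document."""
    event = {"event": "test.event", "message": "logstash pipeline smoke test"}
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode(),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def mtls_context(ca: str = CA_FILE, cert: str = CERT_FILE, key: str = KEY_FILE) -> ssl.SSLContext:
    """Trust the generated CA and present the generated client certificate."""
    ctx = ssl.create_default_context(cafile=ca)
    ctx.load_cert_chain(certfile=cert, keyfile=key)
    return ctx


def send_test_event(url: str = "https://localhost:9601") -> int:
    """Send the test request over mutual TLS and return the HTTP status code."""
    with urllib.request.urlopen(build_test_request(url), context=mtls_context()) as resp:
        return resp.status
```

send_test_event() returns the HTTP status code. A 200 indicates that Logstash accepted the request; a TLS error usually points to the certificate paths in teleport-audit.conf.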

Pipeline not starting?

If Logstash fails to initialize the pipeline, it may continue to attempt to contact Elasticsearch. In that case, you will see repeated logs like the one below:

Sep 12 19:43:04 myhost logstash[33762]: [2022-09-12T19:43:04,519][WARN ][logstash.outputs.elasticsearch][main] Attempted to resurrect connection to dead ES instance, but got an error {:url=>"http://teleport:xxxxxx@127.0.0.1:9200/", :exception=>LogStash::Outputs::ElasticSearch::HttpClient::Pool::HostUnreachableError, :message=>"Elasticsearch Unreachable: [http://127.0.0.1:9200/][Manticore::ClientProtocolException] 127.0.0.1:9200 failed to respond"}

Diagnosing the problem

To diagnose the cause of errors initializing your Logstash pipeline, search your Logstash journalctl logs for the following, which indicate that the pipeline is starting. The relevant error logs should come shortly after these:

Sep 12 18:15:52 myhost logstash[27906]: [2022-09-12T18:15:52,146][INFO][logstash.javapipeline][main] Starting pipeline {:pipeline_id=>"main","pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50,"pipeline.max_inflight"=>250,"pipeline.sources"=>["/etc/logstash/conf.d/teleport-audit.conf"],:thread=>"#<Thread:0x1c1a3ee5 run>"}

Sep 12 18:15:52 myhost logstash[27906]: [2022-09-12T18:15:52,912][INFO][logstash.javapipeline][main] Pipeline Java execution initialization time {"seconds"=>0.76}

Disabling Elasticsearch TLS

This guide assumes that you have already configured Elasticsearch and Logstash to communicate with one another via TLS.

If your Elastic Stack deployment is in a sandboxed or low-security environment (e.g., a demo environment), and your journalctl logs for Logstash show that Elasticsearch is unreachable, you can disable TLS for communication between Logstash and Elasticsearch.

Edit the file /etc/elasticsearch/elasticsearch.yml to set xpack.security.http.ssl.enabled to false, then restart Elasticsearch.

Step 3/4. Run the Event Handler plugin

Configure the Teleport Event Handler

In this section, you will configure the Teleport Event Handler for your environment.

Linux server:

Earlier, we generated a file called teleport-event-handler.toml to configure the Fluentd event handler. This file includes settings similar to the following:

storage = "./storage"
timeout = "10s"
batch = 20
namespace = "default"
# The window size configures the duration of the time window for the event handler
# to request events from Teleport. By default, this is set to 24 hours.
# Reduce the window size if the events backend cannot manage the event volume
# for the default window size.
# The window size should be specified as a duration string, parsed by Go's time.ParseDuration.
window-size = "24h"

[forward.fluentd]
ca = "/home/bob/event-handler/ca.crt"
cert = "/home/bob/event-handler/client.crt"
key = "/home/bob/event-handler/client.key"
url = "https://fluentd.example.com:8888/test.log"
session-url = "https://fluentd.example.com:8888/session"

[teleport]
addr = "example.teleport.com:443"
identity = "identity"

Modify the configuration to replace fluentd.example.com with the address of your log forwarder. In this guide, that is the Logstash http input you configured earlier.
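Because window-size is parsed by Go's time.ParseDuration, values such as "24h", "12h", or "1h30m" are valid, while "1d" is not (Go durations have no day unit). The Python function below is a simplified stand-in for checking a value before restarting the plugin. It is not the plugin's parser and ignores Go's overflow checks. parse_go_duration is a hypothetical name.

```python
import re

# Seconds per unit for the units Go's time.ParseDuration accepts.
_UNITS = {
    "ns": 1e-9, "us": 1e-6, "µs": 1e-6, "μs": 1e-6,
    "ms": 1e-3, "s": 1.0, "m": 60.0, "h": 3600.0,
}
# "ms" is listed before "m" so that "10ms" is not read as 10 minutes plus "s".
_TOKEN = re.compile(r"(\d+(?:\.\d*)?|\.\d+)(ns|us|µs|μs|ms|s|m|h)")


def parse_go_duration(text: str) -> float:
    """Return the number of seconds in a Go-style duration such as "24h" or "1h30m"."""
    sign = 1.0
    if text[:1] in ("+", "-"):
        sign = -1.0 if text[0] == "-" else 1.0
        text = text[1:]
    if text == "0":
        return 0.0  # Go accepts a bare zero without a unit
    if not text:
        raise ValueError("empty duration")
    total, pos = 0.0, 0
    while pos < len(text):
        match = _TOKEN.match(text, pos)
        if match is None:
            raise ValueError(f"invalid duration: {text!r}")
        total += float(match.group(1)) * _UNITS[match.group(2)]
        pos = match.end()
    return sign * total
```

For example, parse_go_duration("24h") returns 86400.0, and parse_go_duration("1d") raises ValueError.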

Helm Chart:

Use the following template to create teleport-plugin-event-handler-values.yaml:

eventHandler:
  storagePath: "./storage"
  timeout: "10s"
  batch: 20
  namespace: "default"
  # The window size configures the duration of the time window for the event handler
  # to request events from Teleport. By default, this is set to 24 hours.
  # Reduce the window size if the events backend cannot manage the event volume
  # for the default window size.
  # The window size should be specified as a duration string, parsed by Go's time.ParseDuration.
  windowSize: "24h"

teleport:
  address: "example.teleport.com:443"
  identitySecretName: teleport-event-handler-identity
  identitySecretPath: identity

fluentd:
  url: "https://fluentd.fluentd.svc.cluster.local/events.log"
  sessionUrl: "https://fluentd.fluentd.svc.cluster.local/session.log"
  certificate:
    secretName: "teleport-event-handler-client-tls"
    caPath: "ca.crt"
    certPath: "client.crt"
    keyPath: "client.key"

persistentVolumeClaim:
  enabled: true

Update the configuration file as follows.

Change forward.fluentd.url to the scheme, host and port you configured for your Logstash http input earlier, https://localhost:9601. Change forward.fluentd.session-url to the same value with the root URL path: https://localhost:9601/.

Change teleport.addr to the host and port of your Teleport Proxy Service, or the Auth Service if you have configured the Event Handler to connect to it directly, e.g., mytenant.teleport.sh:443.

Executable or Docker:

- addr: Include the hostname and HTTPS port of your Teleport Proxy Service or Teleport Enterprise Cloud account (e.g., teleport.example.com:443 or mytenant.teleport.sh:443).
- identity: Fill this in with the path to the identity file you exported earlier.
- client_key, client_crt, root_cas: Comment these out, since we are not using them in this configuration.

Helm Chart:

- address: Include the hostname and HTTPS port of your Teleport Proxy Service or Teleport Enterprise Cloud tenant (e.g., teleport.example.com:443 or mytenant.teleport.sh:443).
- identitySecretName: Fill in the identitySecretName field with the name of the Kubernetes secret you created earlier.
- identitySecretPath: Fill in the identitySecretPath field with the path of the identity file within the Kubernetes secret. If you have followed the instructions above, this will be identity.

If you are providing credentials to the Event Handler using a tbot binary that runs on a Linux server, make sure the value of identity in the Event Handler configuration is the same as the path of the identity file you configured tbot to generate, /opt/machine-id/identity.

Start the Teleport Event Handler

Start the Teleport Event Handler by following the instructions below for your environment: a Linux server or the Helm chart.

On a Linux server, copy the teleport-event-handler.toml file to /etc. Update the settings within the TOML file to match your environment, and use absolute paths for settings such as identity and storage. Files and directories the plugin uses, such as /var/lib/teleport-event-handler, should be accessible only to the system user that runs the teleport-event-handler service.
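The following Python sketch checks both requirements from the paragraph above: absolute paths, and no permission bits for group or other users. It assumes identity sits under [teleport] and storage at the top level of the TOML file; check_paths and is_private are hypothetical names.

```python
"""Hypothetical permission check for Event Handler paths (a sketch).

Assumes `identity` is under [teleport] and `storage` is a top-level key in
teleport-event-handler.toml; adjust the lookups if your file differs.
"""
import os
import stat


def is_private(path):
    """True if group and other users have no permission bits on `path`."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return (mode & 0o077) == 0


def check_paths(config):
    """Return a list of problems with the identity and storage paths."""
    paths = {
        "teleport.identity": config.get("teleport", {}).get("identity"),
        "storage": config.get("storage"),
    }
    problems = []
    for name, path in paths.items():
        if not path:
            problems.append(f"{name} is not set")
        elif not os.path.isabs(path):
            problems.append(f"{name} should be an absolute path, got {path!r}")
        # Paths that do not exist yet (e.g., storage before first run) are skipped.
        elif os.path.exists(path) and not is_private(path):
            problems.append(f"{name} ({path}) is accessible to other users")
    return problems
```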

Next, create a systemd service definition at the path /usr/lib/systemd/system/teleport-event-handler.service with the following content:

[Unit]
Description=Teleport Event Handler
After=network.target

[Service]
Type=simple
Restart=always
ExecStart=/usr/local/bin/teleport-event-handler start --config=/etc/teleport-event-handler.toml --teleport-refresh-enabled=true
ExecReload=/bin/kill -HUP $MAINPID
PIDFile=/run/teleport-event-handler.pid

[Install]
WantedBy=multi-user.target

If you are not using Machine ID to provide short-lived credentials to the Event Handler, you can remove the --teleport-refresh-enabled=true flag.

Enable and start the plugin:

$ sudo systemctl enable teleport-event-handler
$ sudo systemctl start teleport-event-handler

Choose when to start exporting events

When you run the start command, you can choose the point in time from which the Teleport Event Handler begins exporting events. This example starts exporting from May 5th, 2021:

$ teleport-event-handler start --config /etc/teleport-event-handler.toml --start-time "2021-05-05T00:00:00Z"

You can set the start time only once, when you first run the Teleport Event Handler. If you want to change the time frame later, remove the plugin state directory that you specified in the storage field of the handler's configuration file.
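The start time in the example above is an RFC 3339 timestamp in UTC. If you compute it from a local date, converting to UTC first avoids off-by-hours mistakes. This Python sketch assumes the flag accepts the same format as the example; start_time_arg and start_command are hypothetical helpers.

```python
"""Hypothetical helpers for building the --start-time value (a sketch).

Assumes the flag takes a UTC RFC 3339 timestamp such as 2021-05-05T00:00:00Z,
matching the example command above.
"""
import shlex
from datetime import datetime, timezone


def start_time_arg(moment):
    """Format an aware datetime as a UTC RFC 3339 string with a trailing Z."""
    if moment.tzinfo is None:
        raise ValueError("use a timezone-aware datetime to avoid ambiguity")
    return moment.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def start_command(moment, config="/etc/teleport-event-handler.toml"):
    """Return the start command as a shell-quoted string."""
    args = [
        "teleport-event-handler", "start",
        "--config", config,
        "--start-time", start_time_arg(moment),
    ]
    return shlex.join(args)
```

For example, start_command(datetime(2021, 5, 5, tzinfo=timezone.utc)) produces the same command as the example above, without the optional quotes around the timestamp.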

Once the Teleport Event Handler starts, you will see notifications about scanned and forwarded events:

$ sudo journalctl -u teleport-event-handler
DEBU Event sent id:f19cf375-4da6-4338-bfdc-e38334c60fd1 index:0 ts:2022-09-21 18:51:04.849 +0000 UTC type:cert.create event-handler/app.go:140
...
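To summarize a long journal, you can extract the fields from each "Event sent" entry. This Python sketch assumes the line format shown above. That format is log output rather than a stable interface, so the regular expression may need adjusting for your version.

```python
"""Hypothetical parser for "Event sent" log lines (a sketch).

Assumes the key:value layout shown above, where ts holds a date, time,
offset, and zone. Log formats can change between Event Handler versions.
"""
import re
from collections import Counter

EVENT_SENT = re.compile(
    r"Event sent id:(?P<id>\S+) index:(?P<index>\d+) "
    r"ts:(?P<ts>.+?) type:(?P<type>\S+)"
)


def parse_event_sent(line):
    """Return a dict of fields, or None if the line is not an "Event sent" entry."""
    match = EVENT_SENT.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["index"] = int(fields["index"])
    return fields


def count_types(lines):
    """Count forwarded events by audit event type."""
    return Counter(f["type"] for f in map(parse_event_sent, lines) if f)
```

For example, you could save the output of sudo journalctl -u teleport-event-handler --no-pager to a file and pass its lines to count_types.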

If you are deploying the Event Handler with the Helm chart, run the following command on your workstation:

$ helm install teleport-plugin-event-handler teleport/teleport-plugin-event-handler \
  --values teleport-plugin-event-handler-values.yaml \
  --version 15.4.22

Step 4/4. Create a data view in Kibana

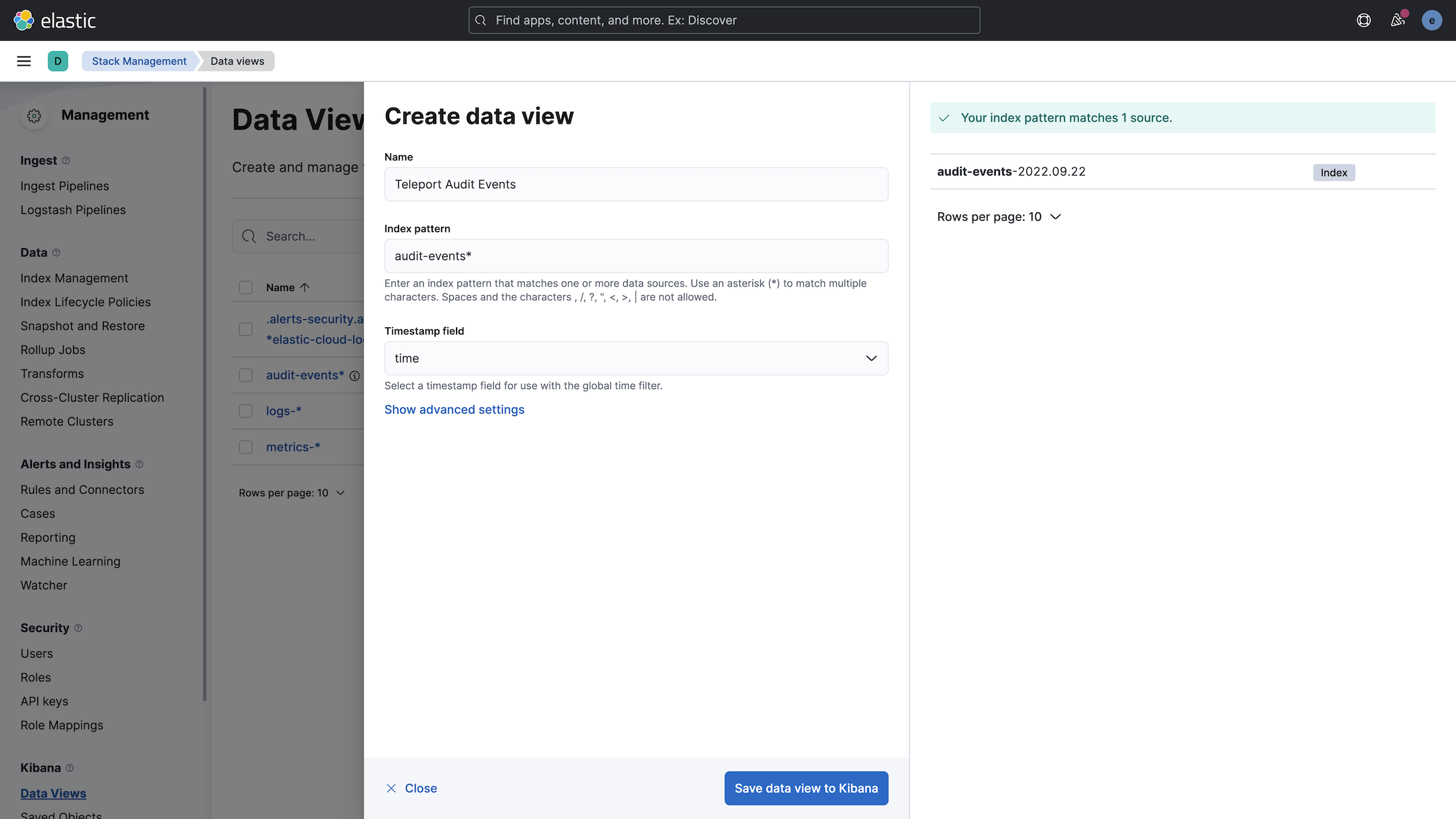

Make it possible to explore your Teleport audit events in Kibana by creating a data view. In the Elastic Stack UI, find the hamburger menu on the upper left of the screen, then click "Management" > "Data Views". Click "Create data view".

For the "Name" field, use "Teleport Audit Events". In "Index pattern", use audit-events-* to select all indices created by our Logstash pipeline. In "Timestamp field", choose time, which Teleport adds to its audit events.
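To preview which indices a pattern selects, the following Python sketch applies a simple wildcard match. The index names are hypothetical and assume the Logstash pipeline writes one index per day with an audit-events- prefix. Kibana's pattern syntax is not identical to Python's fnmatch, so treat the result as an approximation that holds for a plain * wildcard.

```python
"""Approximate preview of a Kibana index pattern (a sketch).

fnmatch semantics differ from Kibana's in edge cases; this is only meant
for a simple trailing * wildcard such as audit-events-*.
"""
from fnmatch import fnmatchcase


def matching_indices(pattern, indices):
    """Return the index names that a simple wildcard pattern would select."""
    return [name for name in indices if fnmatchcase(name, pattern)]
```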

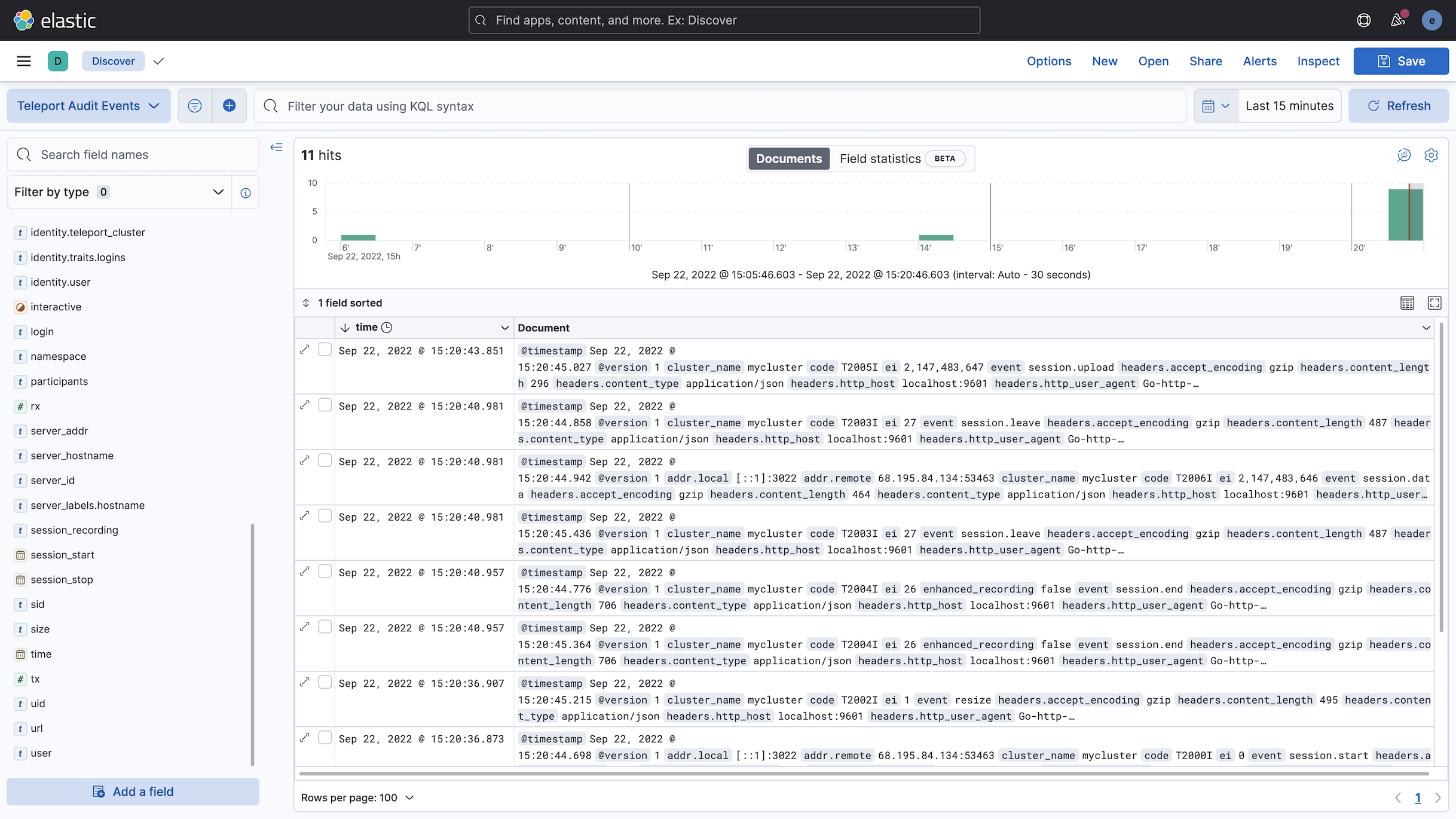

To use your data view, find the search box at the top of the Elastic Stack UI and enter "Discover". On the upper left of the screen, click the dropdown menu and select "Teleport Audit Events". You can now search and filter your Teleport audit events to get a better understanding of how users are interacting with your Teleport cluster.

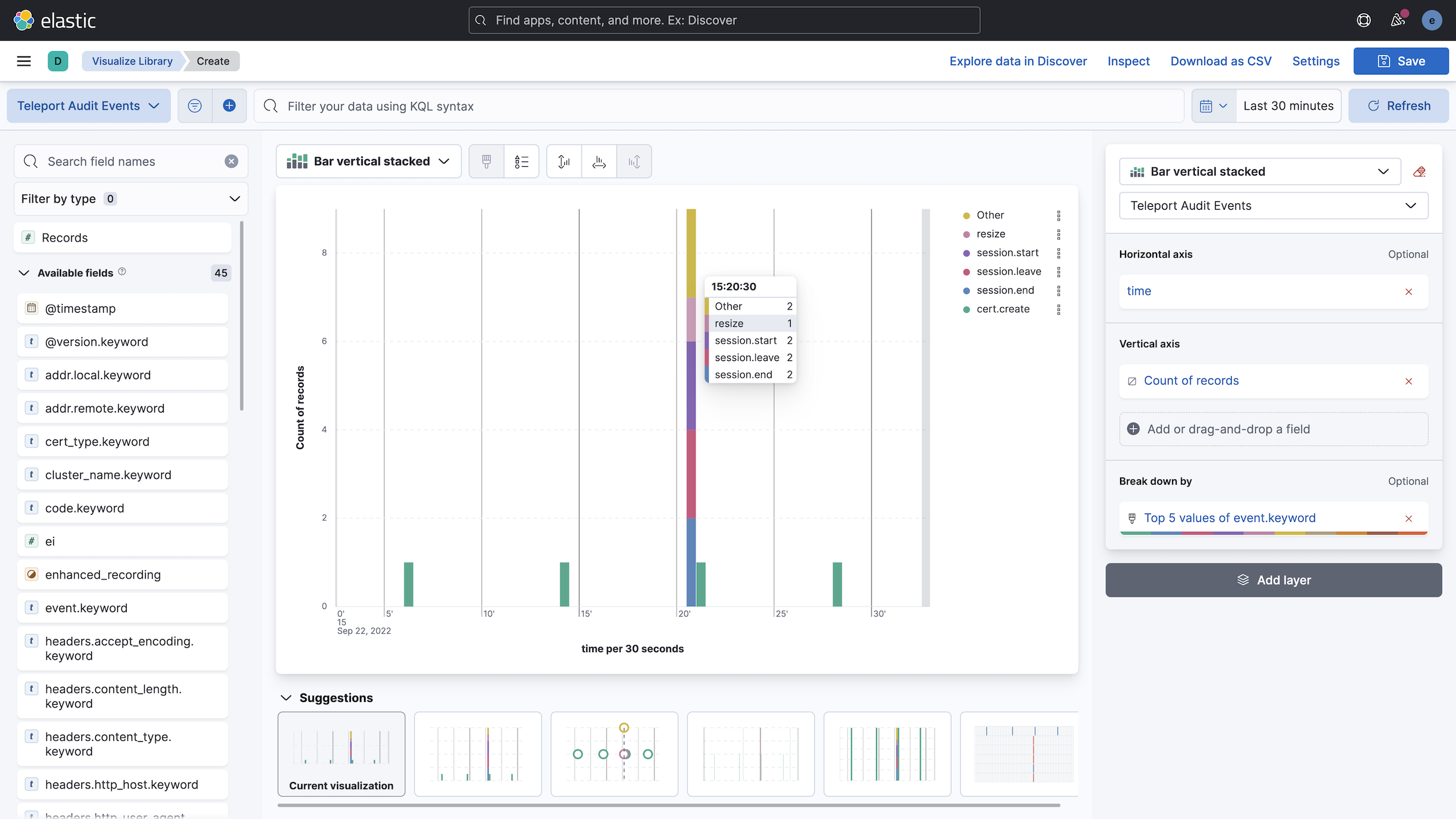

For example, you can click the event field in the left sidebar to visualize the event types of your Teleport audit events over time.
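Conceptually, that chart counts events per type within fixed time buckets. The following Python sketch reproduces the bucketing on a few made-up events, assuming each event is a dict with the event and time fields mentioned above and that time is an RFC 3339 string in UTC. Kibana computes the real chart with an Elasticsearch aggregation; this only illustrates the idea.

```python
"""Illustration of an "event types over time" breakdown (a sketch).

Assumes each audit event has an "event" type and an RFC 3339 "time" string
such as 2022-09-21T18:51:04Z. Kibana does this server-side in Elasticsearch.
"""
from collections import Counter, defaultdict
from datetime import datetime


def bucket_by_hour(events):
    """Map each hour to a Counter of event types seen in that hour."""
    buckets = defaultdict(Counter)
    for event in events:
        # fromisoformat() does not accept a trailing "Z" before Python 3.11.
        ts = datetime.fromisoformat(event["time"].replace("Z", "+00:00"))
        hour = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour][event["event"]] += 1
    return dict(sorted(buckets.items()))
```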

Troubleshooting connection issues

If the Teleport Event Handler is displaying error logs while connecting to your Teleport cluster, ensure that:

- The certificate the Teleport Event Handler uses to connect to your Teleport cluster has not expired. Its lifetime is set by the --ttl flag of the tctl auth sign command, which is 12 hours by default.
- Your Teleport Event Handler configuration file (teleport-event-handler.toml) contains the correct host and port for the Teleport Proxy Service or Auth Service.
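Both checks can be scripted. This Python sketch assumes you know when you ran tctl auth sign and which --ttl you passed (12 hours by default). can_reach only opens a TCP connection, so it confirms that the host and port are reachable, not that TLS or your credentials are valid. All function names are hypothetical.

```python
"""Hypothetical troubleshooting helpers (a sketch).

credentials_expired() assumes you know when `tctl auth sign` ran and the
--ttl used. can_reach() tests TCP reachability only, not TLS or credentials.
"""
import socket
from datetime import datetime, timedelta, timezone


def credentials_expired(signed_at, ttl=timedelta(hours=12), now=None):
    """True if a certificate issued at `signed_at` with lifetime `ttl` has expired."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now >= signed_at + ttl


def split_addr(addr):
    """Split "host:port" into (host, port), raising ValueError if malformed."""
    host, sep, port = addr.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError(f"expected host:port, got {addr!r}")
    return host, int(port)


def can_reach(addr, timeout=5.0):
    """True if a TCP connection to the configured address succeeds."""
    host, port = split_addr(addr)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failures, refusals, and timeouts
        return False
```

For example, can_reach("mytenant.teleport.sh:443") returns False if the host name is wrong or a firewall blocks the port.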

Next steps

Now that you are exporting your audit events to the Elastic Stack, consult our audit event reference so you can plan visualizations and alerts.

While this guide uses the tctl auth sign command to issue credentials for the Teleport Event Handler, production clusters should use Machine ID for safer, more reliable renewals. Read our guide to getting started with Machine ID.